COVID RISK DETECTION

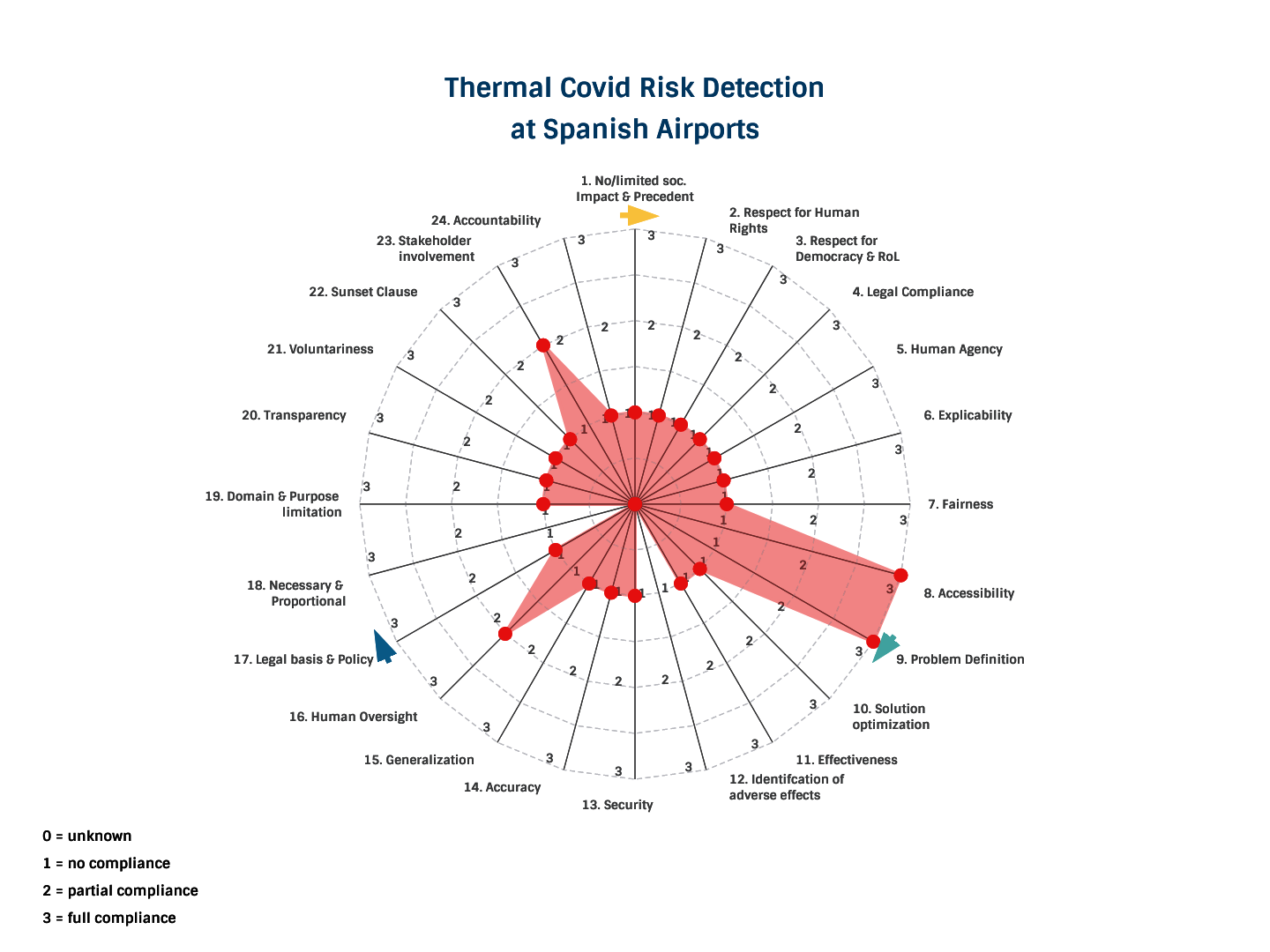

- Governments and companies are using thermal cameras to detect possible COVID-19 cases and prevent the spread of the virus.

- Europe has introduced more surveillance measures than any other region since the start of the pandemic.

- Thermal cameras are currently being used in airports, public transit hubs, offices, retail businesses, health facilities, and on public streets.

- The actual extent of public and private use of these systems is difficult to determine.

- Demand for these devices has increased dramatically, as many European countries have entered the market since the start of the pandemic.

- AI-based thermal cameras are susceptible to errors in temperature readings, particularly when cameras are used to scan multiple people in crowds.

- The European Centre for Disease Prevention and Control has described their use as a “high-cost, low-efficient measure”.

- Thermal cameras can only measure surface body temperature, not internal body temperature.

- Surface body temperature is not a direct indication of COVID-19 infection. Thermal cameras fail to detect asymptomatic people and those in the incubation phase.

- This type of AI-based surveillance negatively impacts citizens and society.

- In any context, thermal cameras are a highly intrusive form of surveillance that creates a chilling effect on individuals.

- The use of thermal cameras sets an undesirable precedent: attempts to justify it normalise the constant surveillance of citizens and the future collection of unauthorised personal data.

- There is a substantial impact on the human right to privacy, on autonomy, and on democracy.

- When thermal cameras are used in public spaces, compliance with the GDPR is difficult if not impossible (e.g. people cannot consent).

- The use of thermal cameras in public spaces is not in compliance with the GDPR.

- Responsibility for complying with policy and legislation falls upon the controller of the AI, yet there seems to be no clear and specific legislation in place for each of these AI-based surveillance devices.

- It is unknown whether people’s data is used for other purposes, e.g. training the AI or statistical analyses.

- It is unknown whether the devices collect data beyond what they are strictly designed for, or beyond what is strictly necessary.

Many European countries have justified the use of thermal cameras amid the pandemic. Yet scientists argue that they are no better at detecting internal body temperature than alternative, less invasive solutions such as infrared thermometers. Given the high cost of indirectly detecting people infected with COVID-19, and the negative impact on privacy, autonomy, and democracy, we see no acceptable trade-off at this stage for the use of AI-based thermal cameras.