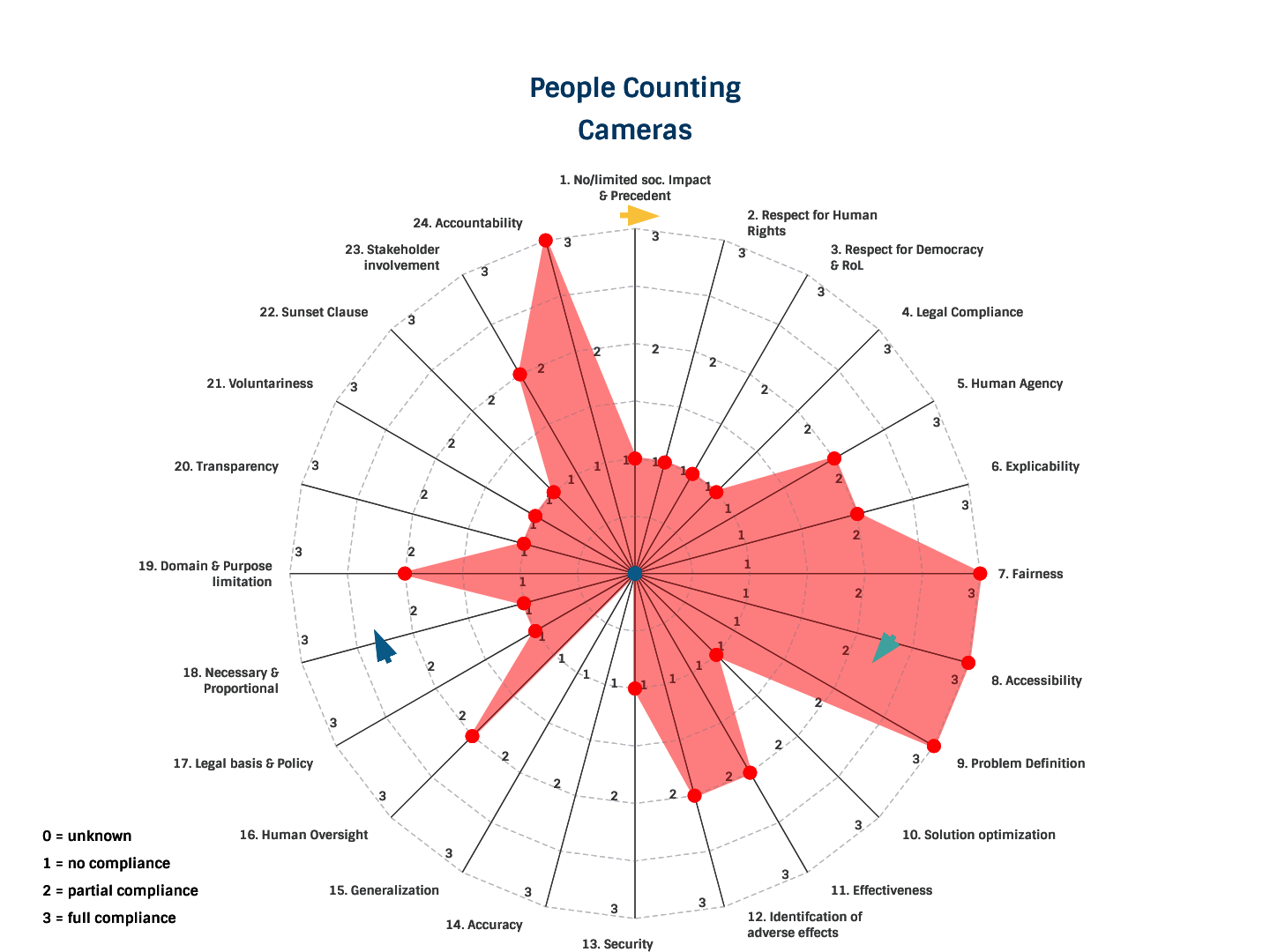

PEOPLE COUNTING CAMERAS


- Since the start of the pandemic, there has been an increased use of AI-based surveillance technology to monitor the number of people in establishments and enforce social distancing.

- The technology is being applied in shops, schools, and public spaces.

- MOBOTIX, LinkVision, Hikvision, V-count and Canon are a few of the many companies offering this technology.

- AI-based counting technologies are among the 43 AI-based surveillance measures that have been adopted across 27 countries. Europe introduced more surveillance measures than any other region.

- Companies offering AI-driven counting sensors claim that they provide an efficient way of counting people in real time to help with social distancing.

- Different technologies can serve the same purpose (counting people) but differ in their efficiency and limitations. Wi-Fi tracking and thermal cameras, for example, are less efficient than optical vision sensors. Optical vision sensors, however, raise concerns about privacy and data security.

- Depending on the methodology and extra features, people counting technologies differ in their intrusiveness.

- People-counting cameras, for example, can include additional AI-based features, such as face recognition or gender detection, that make them more intrusive than systems that simply count people. Because these features process sensitive personal data, they further increase the negative impact on privacy and autonomy.

- People-counting technologies create an unavoidable "chilling" effect, where people change their behaviour because they know they are being watched, especially when applied in public spaces.

- AI-based surveillance cameras installed in public spaces are not in compliance with the GDPR.

- Although there appears to be no clear, specific legislation for these AI-based surveillance technologies, responsibility for complying with existing policy and legislation falls on the controller of the AI system.

- Given the extensive capabilities of some people-counting technologies, it is unclear whether they collect data beyond what they are designed to collect, or beyond what is strictly necessary.

Many European countries have justified the use of people-counting technologies during the pandemic as a way to support social distancing measures. In airports and train stations, for example, analysing the number of people can help optimise passenger flow, enable real-time queue management and improve operations. However, some of these technologies include intrusive AI-based features such as face recognition or gender detection, which come at a significant cost to people's privacy and autonomy. Furthermore, deploying these systems in public spaces without clear communication and transparency about how they work is not compatible with legal and policy frameworks such as the GDPR.