Vaccine Hesitancy Chatbot

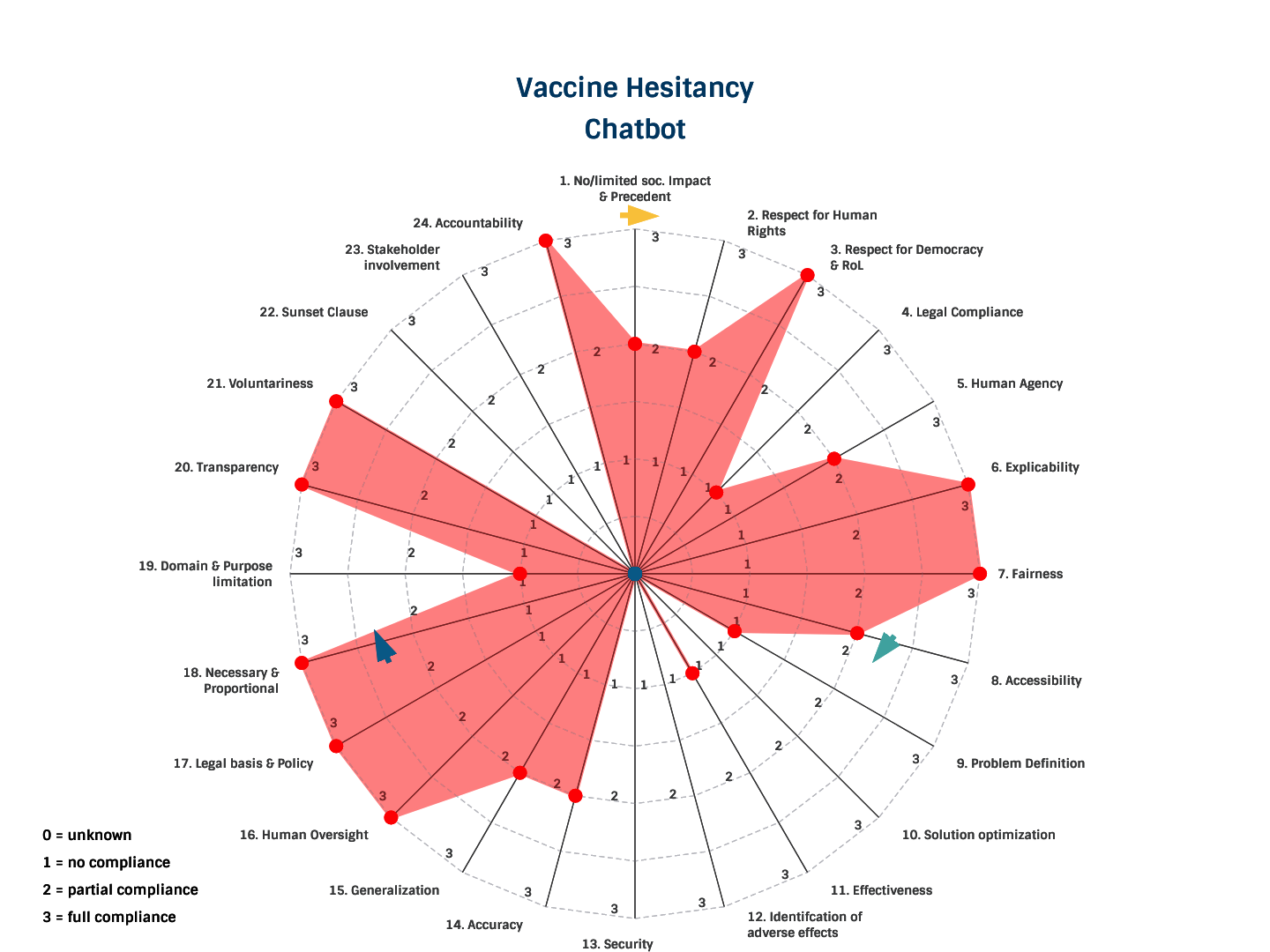

- Vaccine hesitancy chatbots are a possible solution to a clear problem, though their effectiveness needs further research.

- The evaluated chatbot seems to work well and has a mechanism in place in case it malfunctions, making it more robust.

- However, there is no indication of whether the risk of adverse effects is being researched, or whether any measures to secure the system are in place.

- This is largely offset by the fact that the evaluated chatbot is closely overseen by humans, who maintain its operation and its answers and are able to intervene.

- Even though no complete human rights impact assessment was undertaken, the deployment of the evaluated chatbot does not seem to negatively impact human rights.

- It is claimed that using the evaluated chatbot is 100% anonymous. However, since personal data is still being processed and the application is accessible to European users, it must comply with the GDPR. The way valid consent is obtained should be improved.

- The system is fair, not intrusive, and accessible to the target group, and it is clear what its answers are based on.

- However, there is a slight risk to human autonomy, as the chatbot tries to steer users in a certain direction and change their beliefs. Still, it does so by providing well-researched, government-approved information.

- Deploying the evaluated chatbot is necessary, as vaccine acceptance rates are still not high enough. Deployment is also relatively low risk, and therefore proportional given the potential benefits.

- The creators of the evaluated chatbot are transparent about its design, the data used, its training and maintenance processes, and the overall workings of the application.

- Using the chatbot is 100% voluntary and anonymous.

- Nothing restricts the application from being used for other domains or purposes, nor is there any indication of a sunset clause. However, this is not necessarily a bad thing, as chatbots like this can also help people make informed decisions about other subjects (for example, blood donation or quitting smoking).

- Since there is no indication of stakeholder involvement, it is unclear whether people actually want such an application, will use it, or will trust answers given by a chatbot.