FACE MASK DETECTION

- Numerous face mask detection applications are being developed

- Developers widely share methods on how to train a face mask detection system (e.g. via GitHub)

- A number of commercial organisations actively offer face mask detection systems

- Actual public or private use of these systems is difficult to determine

- There are indications that face mask detection is being tested and/or used covertly, e.g. by governments.

- Accurate face mask detection is difficult to achieve because these systems generalise poorly (research)

- It works well in a controlled environment where people are well lit, look straight into the camera, pass at an even pace and wear the same mask.

- It is well known that these systems are less accurate in real-life settings (e.g. poor lighting, crowds, people wearing scarves, hoodies, caps or glasses)

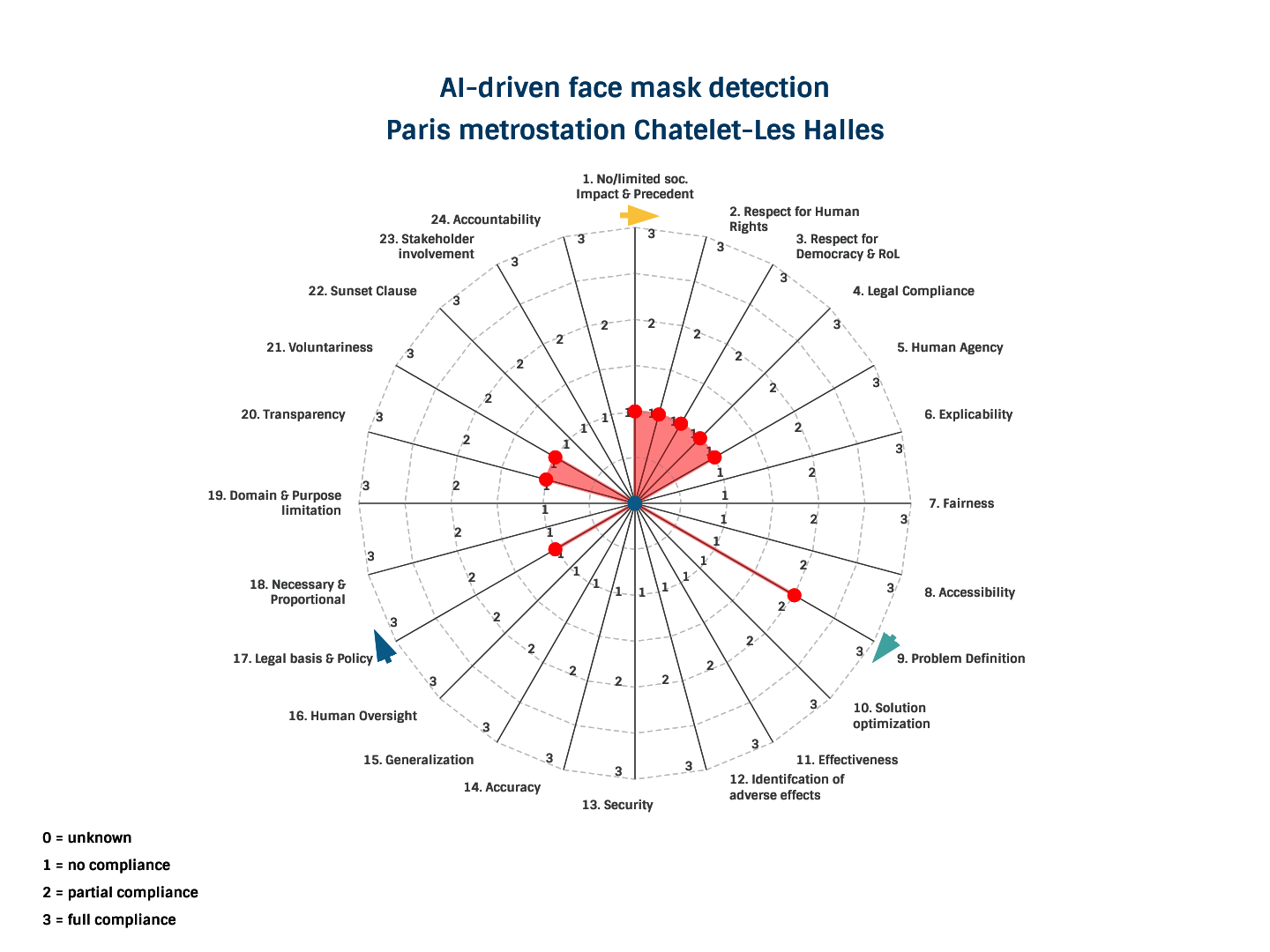

- We consider this type of AI use to have a predominantly negative impact on citizens and society.

- It could set an undesired precedent for the future (more surveillance, or more acceptance of it).

- There is a considerable impact on the human right to a private life and on democracy.

- Compliance with the GDPR is difficult if not impossible (e.g. people cannot consent).

- There is an impact on human agency and free will, since submission to the system can only be avoided by not visiting certain places.

- It cannot be determined whether an appropriate legal basis exists, because actual use is unknown (‘under the radar’)

- It is unknown whether purpose limitation and, for example, a sunset clause are in place.

- There is no publicly available documentation of any kind on the actual use of face mask detection systems

- Submission to these systems is not voluntary (because the public is not made aware of their use).

Given the intrusiveness of facial recognition applications and the fact that multiple governments and legislative bodies have implemented, are developing or are calling for strict regulation of, or even a ban on, facial recognition, we see no acceptable trade-off at this stage for the use of face mask detection cameras.