AI can contribute to tackling the coronavirus crisis

AI can play an important role during this crisis. AI applications can contribute to understanding how the virus spreads, to the search for a vaccine and to the treatment of COVID-19. AI can also help guide policy measures and provide insight into their long-term impact on society and the economy.

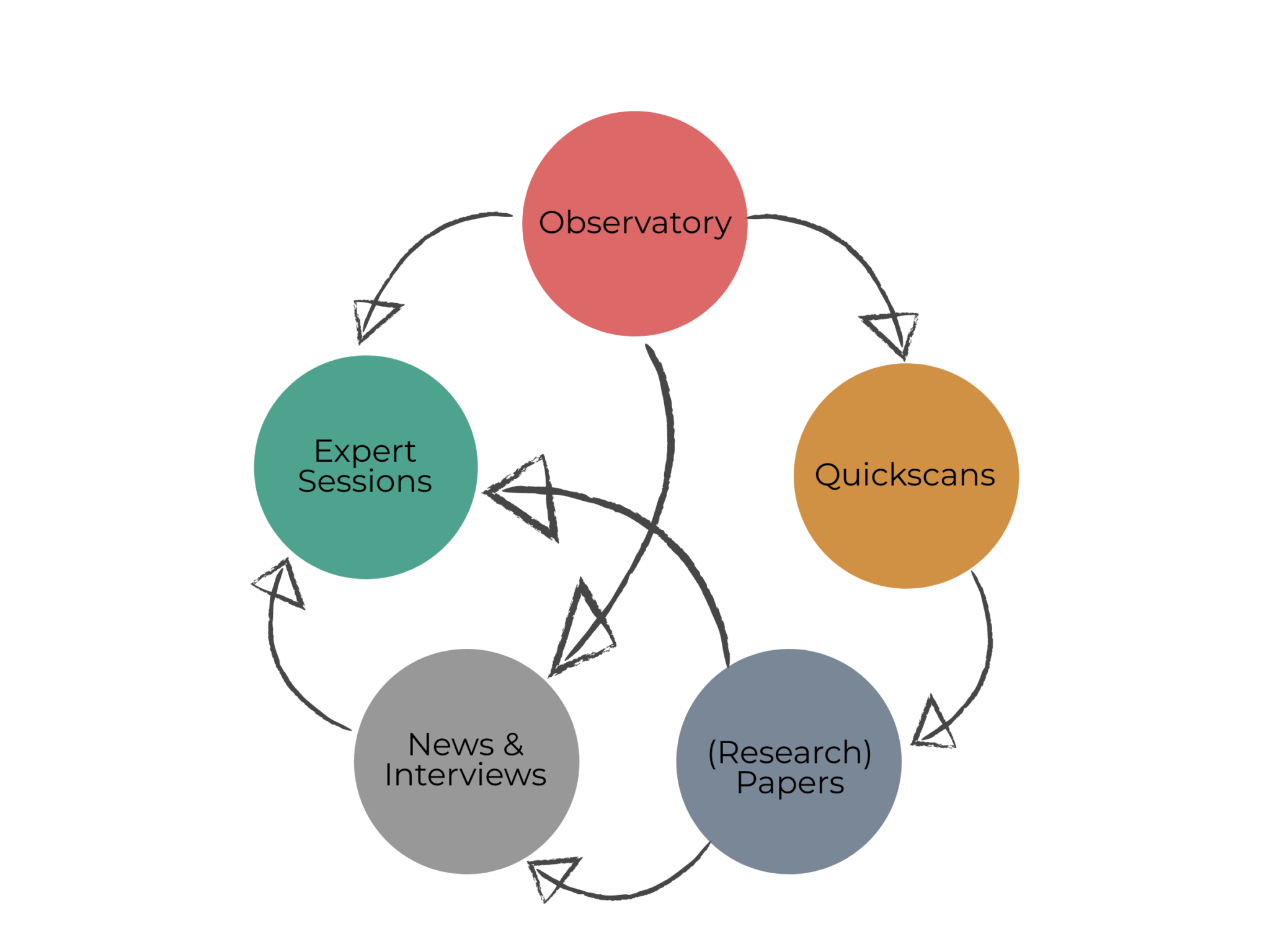

Turning magazine